Workers

Perhaps Workers were customizable AI assistants developed by Perhaps, designed to make LLM-powered tools more accessible, useful, and transparent. The product balanced utility with expressiveness, combining technical sophistication with playful user-facing features.

Origins

According to an explanation on Perhaps' website, CEO Gonzalo Enei described the product's purpose as follows:

"What does a future where we consider AI as true members of our team look like? We built a platform where people can create an simple version of such entities, granting them names, personalities, looks, and training in any specialized topic. They can then answer questions on those matters with their own distinctive flair. Some even participate in the company communication channels."

Features

Customization and Specialization

Each assistant featured a unique visual identity and a specialized knowledge domain. The creation process was intentionally designed to be enjoyable and accessible, encouraging users to engage deeply with the setup experience.(source)

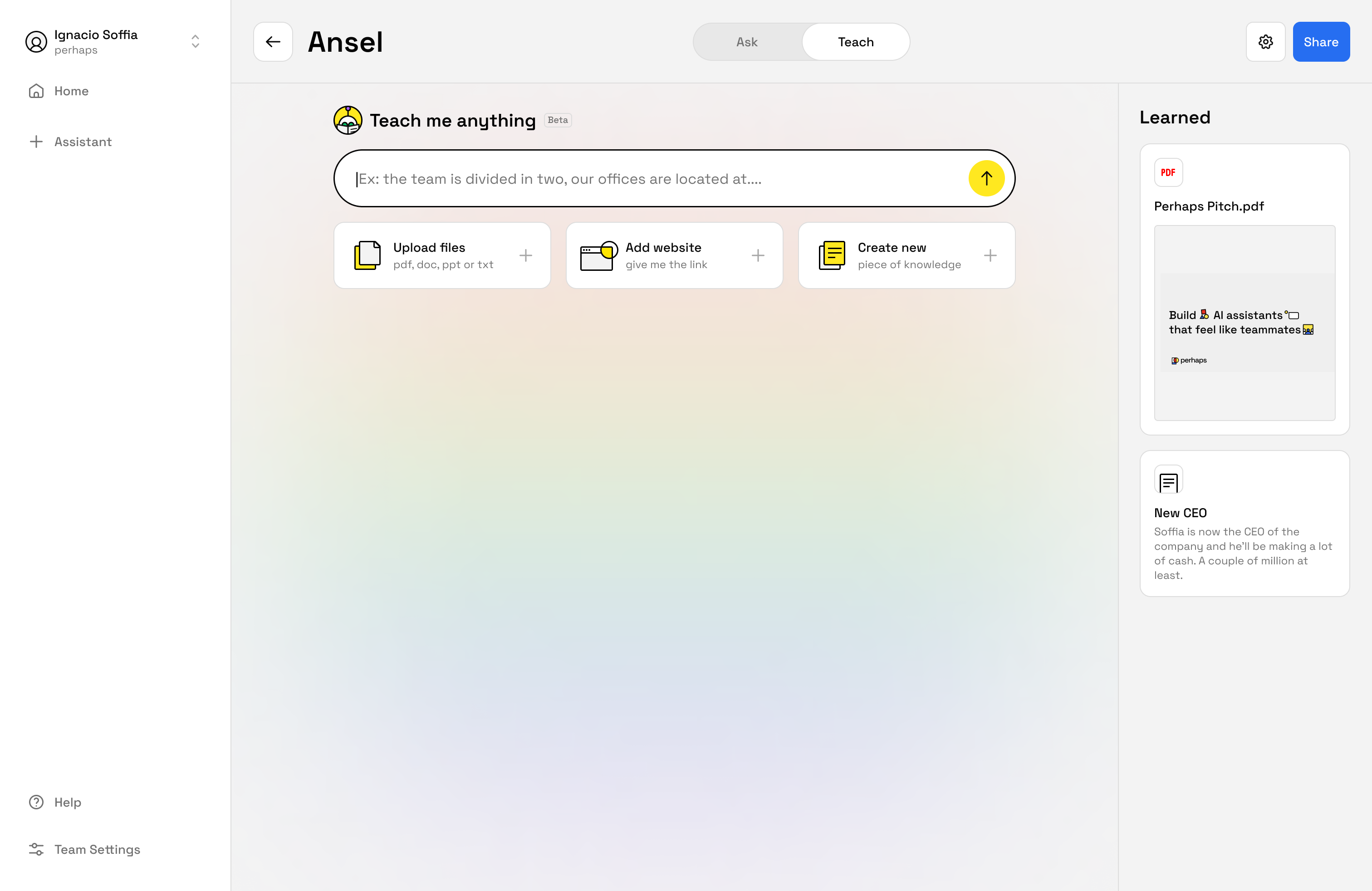

Knowledge Injection and On-the-Fly Learning

Assistants could be trained with uploaded documents or entire websites as knowledge bases. Significant engineering effort was invested in building a robust Retrieval-Augmented Generation (RAG) pipeline to ensure relevance and precision.
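The article does not describe how Perhaps scored relevance inside its pipeline. The sketch below is a minimal, hypothetical illustration of the retrieval step only: it splits documents into fixed-size chunks and ranks them against a question using bag-of-words cosine similarity, a deliberately simple stand-in for the learned embeddings a production RAG system would more likely use. The function names and the `max_words`, `k`, and `min_score` parameters are assumptions.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 50) -> list[str]:
    """Split a document into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def _vector(text: str) -> Counter:
    # Lowercased word counts: a toy stand-in for an embedding vector.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 3, min_score: float = 0.1) -> list[tuple[float, str]]:
    """Return up to k (score, chunk) pairs scoring at least min_score, best first."""
    q = _vector(query)
    scored = [(cosine(q, _vector(c)), c) for c in chunks]
    # Discard weak matches so unrelated text never reaches the model's context.
    scored = [pair for pair in scored if pair[0] >= min_score]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]
```

In a full pipeline, the top-ranked chunks would then be placed in the model's context window next to the user's question; the `min_score` cutoff is what keeps loosely related text out.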

Assistants could also be taught new information interactively and in real time.(source 1)(source 2)
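How teaching was implemented is not documented here. As a hypothetical sketch, real-time learning can be modeled by writing a taught fact into the same store that uploaded documents use, so it becomes retrievable on the very next question without re-indexing or retraining. The `KnowledgeBase` class, its methods, and the word-overlap matching are illustrative assumptions.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field


def _tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class KnowledgeBase:
    """Hypothetical in-memory store mixing uploaded and taught knowledge."""
    entries: list[dict] = field(default_factory=list)

    def add(self, text: str, source: str) -> None:
        self.entries.append({"text": text, "source": source, "tokens": _tokens(text)})

    def teach(self, fact: str, teacher: str) -> None:
        # Taught facts share the store with documents, so they are usable
        # immediately; the source label records who taught them.
        self.add(fact, source=f"taught by {teacher}")

    def best_match(self, question: str) -> dict | None:
        """Return the entry sharing the most words with the question, if any."""
        q = _tokens(question)
        scored = [(len(q & e["tokens"]), e) for e in self.entries]
        scored = [pair for pair in scored if pair[0] > 0]
        if not scored:
            return None
        return max(scored, key=lambda pair: pair[0])[1]
```

For example, after `kb.teach("Maria handles payroll questions.", teacher="admin")`, a question such as "Who handles payroll?" that previously matched nothing now returns the taught entry.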

Feedback and Iteration Loops

Assistant creators received notifications when their assistants failed to provide answers, enabling a continuous feedback and refinement loop.(source)
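A minimal sketch of such a loop, under the assumption that "failed to provide an answer" means retrieval found no supporting knowledge: the assistant tells the user it does not know and queues a notification for its creator. The `Assistant` class, the `retrieve` hook, and the message wording are hypothetical, and a real deployment would deliver notifications through a channel such as email or chat rather than a list.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Assistant:
    name: str
    creator: str
    retrieve: Callable[[str], list[str]]  # hypothetical hook returning supporting passages
    notifications: list[str] = field(default_factory=list)

    def answer(self, question: str) -> str:
        passages = self.retrieve(question)
        if not passages:
            # No supporting knowledge: queue a note so the creator can teach
            # the missing information, closing the feedback loop.
            self.notifications.append(
                f"@{self.creator}: {self.name} could not answer: {question!r}"
            )
            return "I don't know that yet. I've let my creator know."
        # Placeholder: a real assistant would pass the passages to an LLM here.
        return passages[0]
```

Keeping the unanswered question verbatim in the notification gives the creator exactly the gap to fill through teaching.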

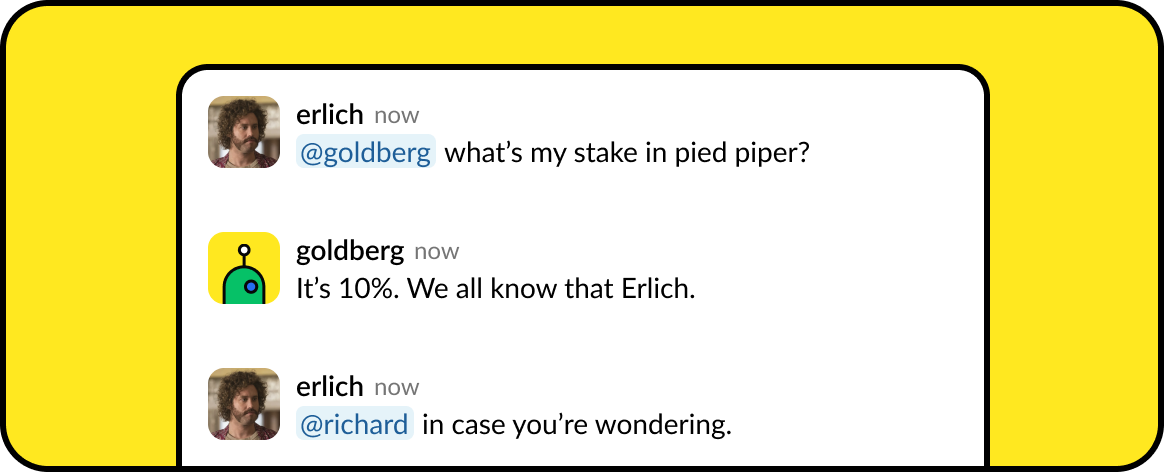

Multi-Platform Deployment

Perhaps Assistants could be deployed in multiple environments, including Slack, allowing teams to bring AI assistance directly into their existing workflows.

Reactive Backdrop

The Reactive Backdrop was a visual layer that reflected the assistant's internal state or intent during interactions. It served to build user trust and improve the legibility of AI behavior.(source)

Transparency Through Citations

To improve answer traceability, assistants displayed inline citations showing where specific facts or conclusions were sourced.

Reactive Backdrop: Experimenting in the AI-UI Era

The Reactive Backdrop was introduced by Perhaps as an early-stage contribution to the evolving grammar of AI-native interfaces. In a landscape reshaped by the ubiquity of LLMs, traditional chat UI patterns, borrowed from human-to-human communication, were increasingly ill-suited for machine interlocutors. The Reactive Backdrop aimed to reimagine visual cues for a fundamentally new interaction paradigm.

Conceptual Foundation

The core insight behind the Reactive Backdrop was that interfaces for communicating with AI should not mimic those designed for human interaction. The team drew an analogy to autonomous vehicles, whose design is expected to eventually diverge from that of traditional cars, and argued that AI UIs likewise need their own affordances and symbolic vocabulary: a new class of more ambient, expressive, and responsive signals that would help users make sense of AI behavior.

Design Goals

The Reactive Backdrop was designed with two primary objectives: feeling and feedback.

For Feeling, the backdrop gave assistants a subtle sense of presence and personality beyond static avatars or text prompts. Each assistant had a bespoke animated gradient palette that made it feel "alive," reinforcing its identity as a unique and responsive entity. This contrasted with the more sterile presentation of OpenAI's custom GPTs, which lacked visual distinctiveness despite their functional sophistication.
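The article does not say how the bespoke palettes were chosen. One hypothetical approach, sketched below, derives a stable palette from a hash of the assistant's id, so each assistant always renders with the same distinctive colors without anyone storing them. The function name, the hue spacing of 0.08, and the lightness and saturation values are assumptions; an animation layer would then interpolate between these stops over time.

```python
import colorsys
import hashlib


def gradient_palette(assistant_id: str, stops: int = 3) -> list[str]:
    """Derive a deterministic set of gradient stops (hex colors) from an id."""
    digest = hashlib.sha256(assistant_id.encode("utf-8")).digest()
    base_hue = digest[0] / 255  # first hash byte picks a base hue in [0, 1]
    colors = []
    for i in range(stops):
        # Neighboring hues 0.08 apart give a soft, analogous gradient.
        hue = (base_hue + 0.08 * i) % 1.0
        r, g, b = colorsys.hls_to_rgb(hue, 0.6, 0.7)  # lightness 0.6, saturation 0.7
        colors.append("#{:02x}{:02x}{:02x}".format(round(r * 255), round(g * 255), round(b * 255)))
    return colors
```

Hashing makes the palette reproducible across sessions and devices; a creator-facing editor could still let users override it.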

For Feedback, the backdrop served as a soft but persistent indicator of the assistant's internal state. Blue meant active thinking, green indicated successful knowledge ingestion, red signaled failure, and a rainbow gradient marked an editable "teaching" state. These changes paralleled human communication signals like tone or expression, offering users a richer, more embodied sense of interaction flow.
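The state-to-color semantics described above (blue for thinking, green for successful ingestion, red for failure, rainbow for teaching) can be sketched as a small lookup table. The hex values, the idle state that falls back to the assistant's own palette, and the event names are hypothetical additions for illustration.

```python
from enum import Enum


class BackdropState(Enum):
    IDLE = "idle"
    THINKING = "thinking"
    INGESTED = "ingested"
    FAILED = "failed"
    TEACHING = "teaching"


# Status colors per the article's semantics; the exact hex values are assumptions.
BACKDROP_COLORS = {
    BackdropState.THINKING: ["#3b82f6"],  # blue: active thinking
    BackdropState.INGESTED: ["#22c55e"],  # green: knowledge ingested
    BackdropState.FAILED: ["#ef4444"],    # red: failure
    BackdropState.TEACHING: ["#ef4444", "#f59e0b", "#eab308", "#22c55e", "#3b82f6", "#8b5cf6"],  # rainbow
}

# Hypothetical interaction events and the state each one moves the backdrop into.
EVENT_TO_STATE = {
    "question_received": BackdropState.THINKING,
    "answer_sent": BackdropState.IDLE,
    "upload_succeeded": BackdropState.INGESTED,
    "upload_failed": BackdropState.FAILED,
    "answer_failed": BackdropState.FAILED,
    "teach_mode_entered": BackdropState.TEACHING,
}


def backdrop_colors(state: BackdropState, assistant_palette: list[str]) -> list[str]:
    """Return the gradient stops to render for the current state."""
    # At rest the assistant shows its bespoke palette (the "feeling" goal);
    # every other state overrides it with a status color (the "feedback" goal).
    return BACKDROP_COLORS.get(state, assistant_palette)
```

Separating events from states keeps the rendering layer ignorant of where a state change came from, which makes it easy to add new triggers later.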

Broader Implications

The Reactive Backdrop echoed broader trends in AI-UI, such as streaming text, context-aware visuals, and multimodal responsiveness, but offered a fresh take by embedding those ideas in the ambient layer of the interface. It emphasized that trust, usability, and even delight in AI tools could emerge from subtle design gestures, not just raw model performance.

While influenced by precedents like Siri and ChatGPT for iOS, the implementation was original in its synthesis of personalization, interaction state, and continuous visual dynamism. As of its release, few case studies had addressed this dimension of AI interaction design, positioning the Reactive Backdrop as a notable early experiment in the space.

Inline Citations

As part of its broader effort to make LLM-powered assistants more credible and user-trustworthy, Perhaps implemented inline citations, a system for referencing specific sources within generated responses. This approach addressed a common shortcoming of LLMs: their tendency to "hallucinate" facts without clear attribution.

Implementation

The team used a structured RAG setup that injected labeled knowledge snippets into the model's context window. Each snippet was formatted with a unique citationId, a human-readable title, and the source content. A system message instructed the model to include citation references inline using the correct citationId format.
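The exact prompt and markup Perhaps used are not given. The sketch below assumes a generic chat-style message list (`role`/`content` dictionaries, not any specific vendor's API), XML-like `<entry>` tags carrying the citationId and title, and a hypothetical `[[citationId]]` marker for inline references. It only shows how labeled snippets and the citation instruction might be assembled into the context window.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    citation_id: str
    title: str
    content: str


# Hypothetical instruction; the [[citationId]] marker syntax is an assumption.
SYSTEM_PROMPT = (
    "Answer using only the knowledge entries below. "
    "After each claim that relies on an entry, cite it inline as [[citationId]]. "
    "Do not cite entries that are irrelevant to the question."
)


def build_messages(question: str, snippets: list[Snippet]) -> list[dict[str, str]]:
    """Assemble a chat-style message list with labeled knowledge entries."""
    knowledge = "\n\n".join(
        f'<entry citationId="{s.citation_id}" title="{s.title}">\n{s.content}\n</entry>'
        for s in snippets
    )
    return [
        {"role": "system", "content": f"{SYSTEM_PROMPT}\n\n{knowledge}"},
        {"role": "user", "content": question},
    ]
```

Putting the knowledge in the system message keeps it separate from the user's words, so the model can distinguish citable sources from the question itself.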

With this setup, the model referenced only the relevant citations and ignored misleading or off-topic data, increasing both factual accuracy and user confidence.

UX Enhancements

To improve legibility, responses were post-processed with regular expressions that converted citationIds into clickable links or tooltips showing their titles and sources. IDs contained no vowels, so the model would not read them as words or attach meaning to them, which reduced confusion. Citations were also reordered to match their order of appearance in the text, mimicking academic writing norms.
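The three steps above (vowel-free IDs, regex post-processing, and numbering by first appearance) can be sketched as follows. This assumes the hypothetical `[[citationId]]` marker syntax and an HTML link as the rendered form; the function names, the ID alphabet, and the choice to silently drop IDs not present in the supplied sources are assumptions of this sketch, not documented behavior.

```python
import random
import re

# Consonants only (y excluded too), plus digits, so IDs never form readable words.
ID_ALPHABET = "bcdfghjklmnpqrstvwxz0123456789"
CITATION_RE = re.compile(r"\[\[([a-z0-9]+)\]\]")


def make_citation_id(rng: random.Random, length: int = 4) -> str:
    """Random vowel-free id that the model is unlikely to interpret semantically."""
    return "".join(rng.choice(ID_ALPHABET) for _ in range(length))


def render_citations(answer: str, sources: dict[str, dict[str, str]]) -> tuple[str, list[dict[str, str]]]:
    """Replace [[id]] markers with numbered links, numbered by first appearance."""
    order: list[str] = []

    def replace(match: re.Match) -> str:
        cid = match.group(1)
        if cid not in sources:
            return ""  # drop ids that point at no supplied source
        if cid not in order:
            order.append(cid)  # re.sub scans left to right, so this is appearance order
        n = order.index(cid) + 1
        src = sources[cid]
        return f'<a href="{src["url"]}" title="{src["title"]}">[{n}]</a>'

    html = CITATION_RE.sub(replace, answer)
    footnotes = [{"n": str(i + 1), **sources[cid]} for i, cid in enumerate(order)]
    return html, footnotes
```

For example, with sources `bz9q` (a shipping FAQ) and `kxtr` (a refund policy), the answer "Shipping is free over $50 [[bz9q]]. Refunds take five days [[kxtr]][[bz9q]]." renders the shipping FAQ as [1] both times and the refund policy as [2], and the footnote list follows the same order.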

Strategic Implications

This feature was not merely cosmetic. Citations encouraged more cautious LLM behavior, subtly steering the model toward greater factual rigor, much as people choose their words more carefully when asked to provide sources. The feature also allowed Perhaps assistants to reference not only static documents and URLs but also knowledge acquired through teaching interactions.

In this way, Perhaps sought to pair generative fluency with epistemic accountability, building assistants that could both answer fluently and cite their sources.

References

1. Perhaps Inc. (December 2023). Tweet about customization features. X (formerly Twitter).

2. Cacoos. (December 2023). Tweet about on-the-fly learning. X (formerly Twitter).

3. Perhaps Inc. (January 2024). Tweet about teaching capabilities. X (formerly Twitter).

4. Enei, Gonzalo. (December 2023). Tweet about feedback loops. X (formerly Twitter).

5. Perhaps Inc. (2024). Reactive Backdrop: experimenting in the AI UI era.

6. Soffia, Ignacio. (January 2024). Tweet about Reactive Backdrop. X (formerly Twitter).